Debate on Scaling Language Models for AI Development by 2040

The text discusses whether scaling up language models could lead to powerful artificial intelligence (AI) by 2040. It is framed as a debate between a believer and a skeptic over whether scaling is feasible and what it implies for reaching artificial general intelligence (AGI). The skeptic points to the scarcity of high-quality language data and the difficulty of getting self-play and synthetic data to work. The believer counters that the ease of AI progress so far suggests synthetic data could work, and that the models have the potential for insight-based learning. The two also dispute whether scaling has worked so far: the believer cites consistent performance scaling on benchmarks, while the skeptic questions how general those benchmarks are. The author concludes with tentative probabilities: 70% that scaling, algorithmic progress, and hardware advances lead to AGI by 2040, and 30% that the skeptic is right and scaling might not work.

- The text presents a debate between a believer and a skeptic over whether scaling up language models could lead to powerful artificial intelligence (AI) by 2040.

- The skeptic raises concerns about the scarcity of high-quality language data and the difficulty of getting self-play and synthetic data to work.

- The believer argues that the ease of AI progress so far suggests that synthetic data could work and that the models have the potential for insight-based learning.

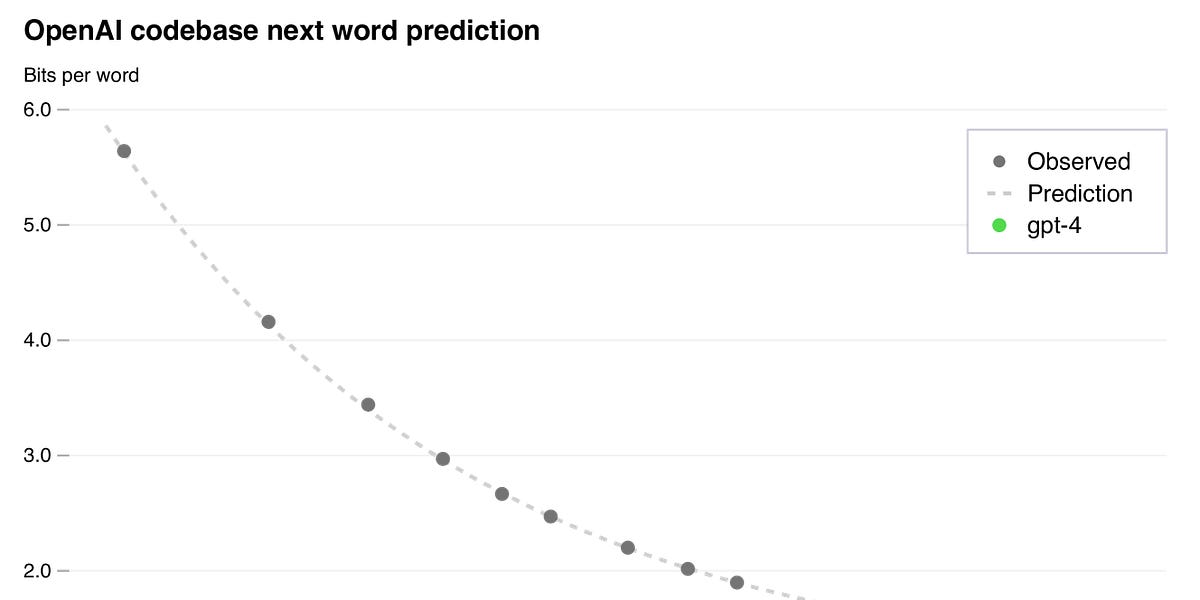

- The two sides also dispute whether scaling has worked so far: the believer cites consistent performance scaling on benchmarks, while the skeptic questions how general those benchmarks are.

- The conclusion presents the author's tentative probabilities: 70% that scaling, algorithmic progress, and hardware advances lead to AGI by 2040, and 30% that the skeptic is right and scaling might not work.